Case Studies


Ionio partnered with a Europe-based retail analytics company to build the future of retail before the tools existed. We implemented function calling and digital twins months before they became industry standards.

Ionio partnered with a Saudi-based retail analytics company to build the future of retail before the tools existed. We implemented function calling and digital twins months before they became industry standards.

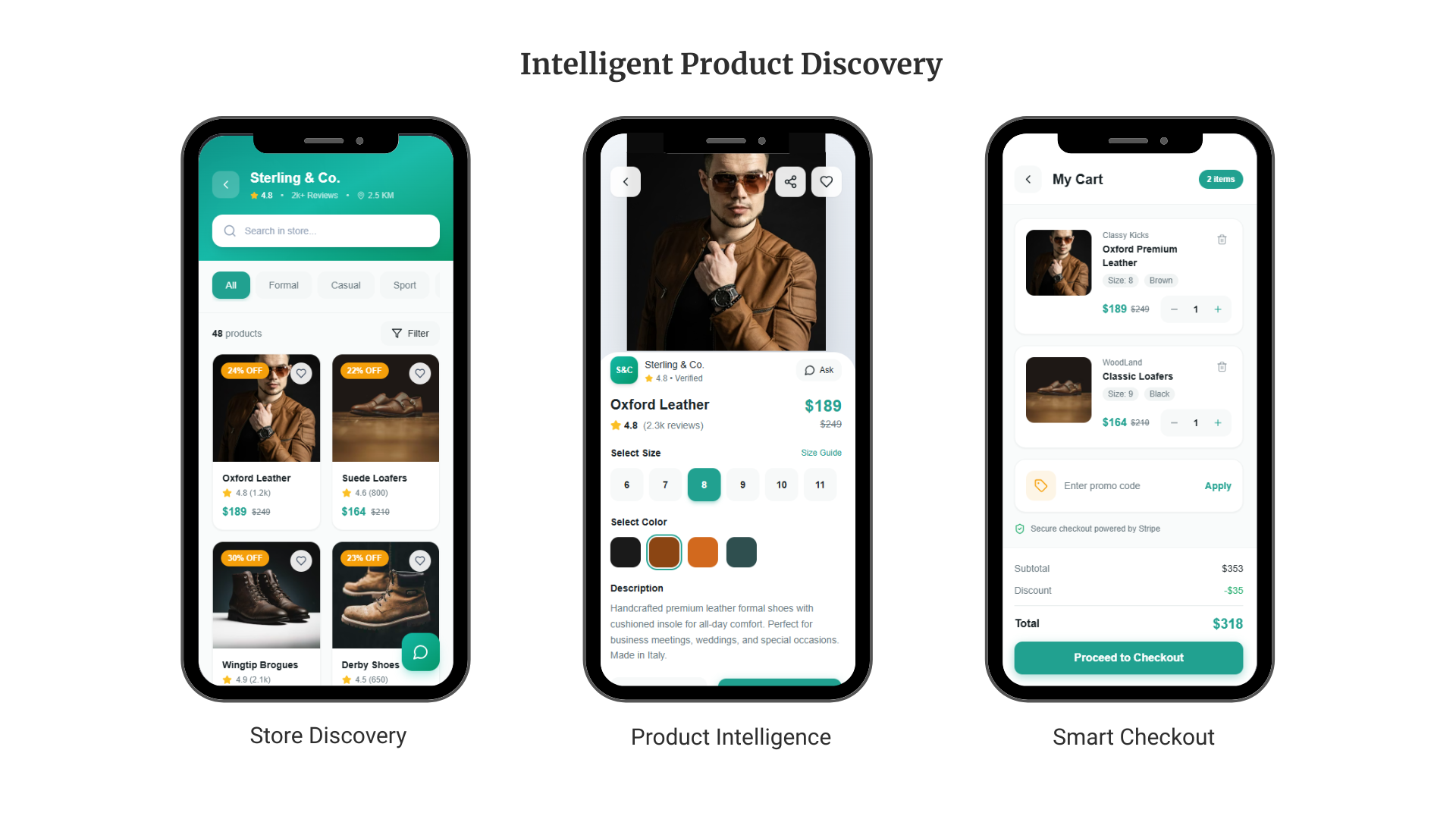

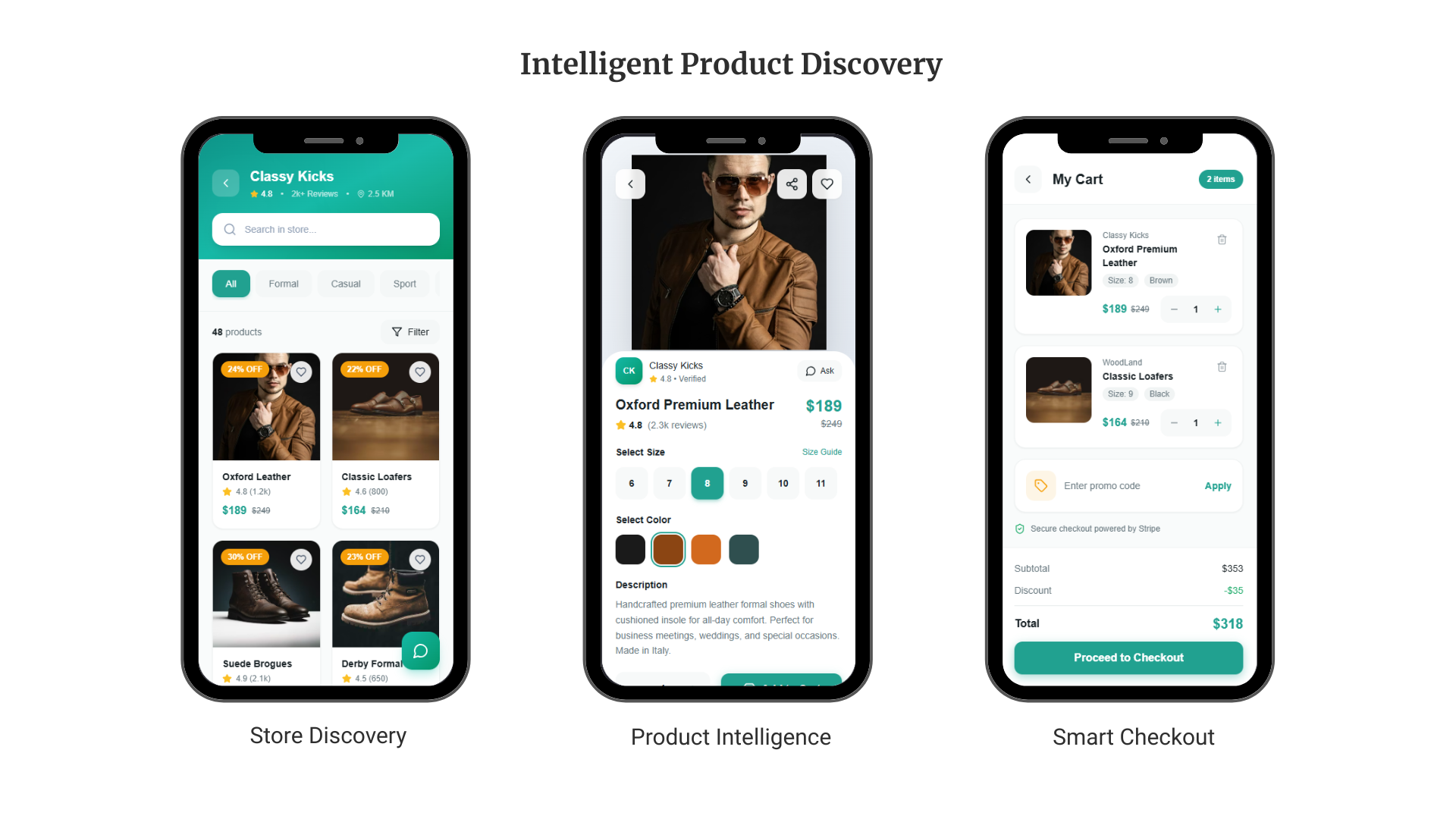

A mid-market retail analytics platform serving 2M+ monthly active users was bleeding value at the discovery layer. Shoppers searched. They didn't find. They left.

The platform had built a successful business connecting shoppers to physical retail locations across the region. But the experience was manual, fragmented, and increasingly outdated. Users searching through a catalog of 1M+ products had to cross-reference inventory across mall locations themselves.

The deeper problem was data. Nine years of behavioral signals—purchase histories, browse patterns, loyalty interactions—sat scattered across 35+ microservices and 150+ API routes. A shopper could buy baby products for three years, and the platform would still show them generic recommendations.

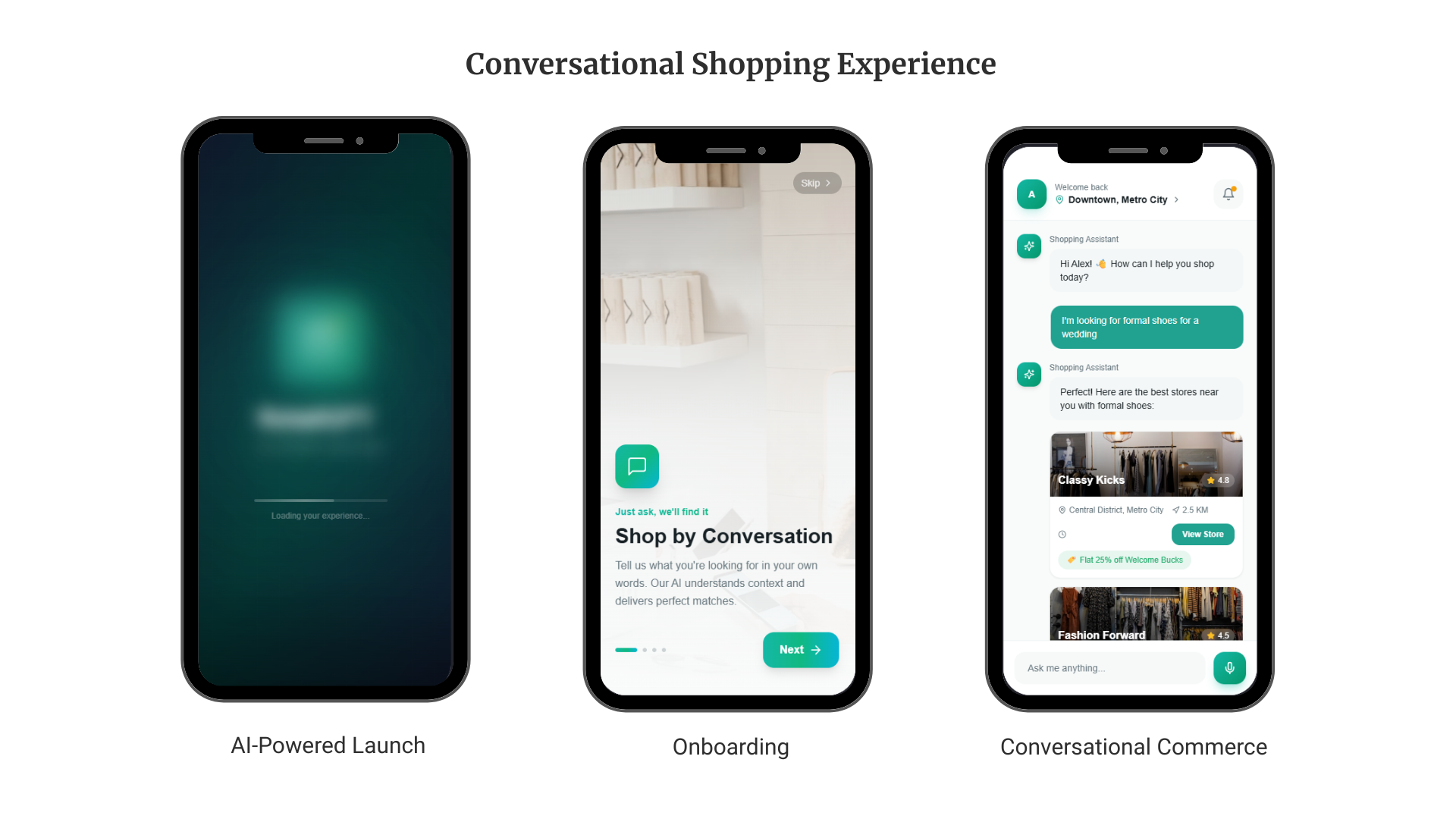

The client's vision: build a conversational AI layer that could finally unlock this data. Not a chatbot. Infrastructure for prescriptive commerce.

The Catch: In late 2023, the tools to build this didn't exist yet. LLMs couldn't reliably call external functions. Voice-to-voice AI was experimental. Building persistent customer memory—storing preferences, relationships, dietary restrictions—required custom architecture that no off-the-shelf solution could provide.

This wasn't a wrapper around ChatGPT. The project required solving five problems that the industry hadn't standardized solutions for. We started in late 2023—months before the tooling would catch up.

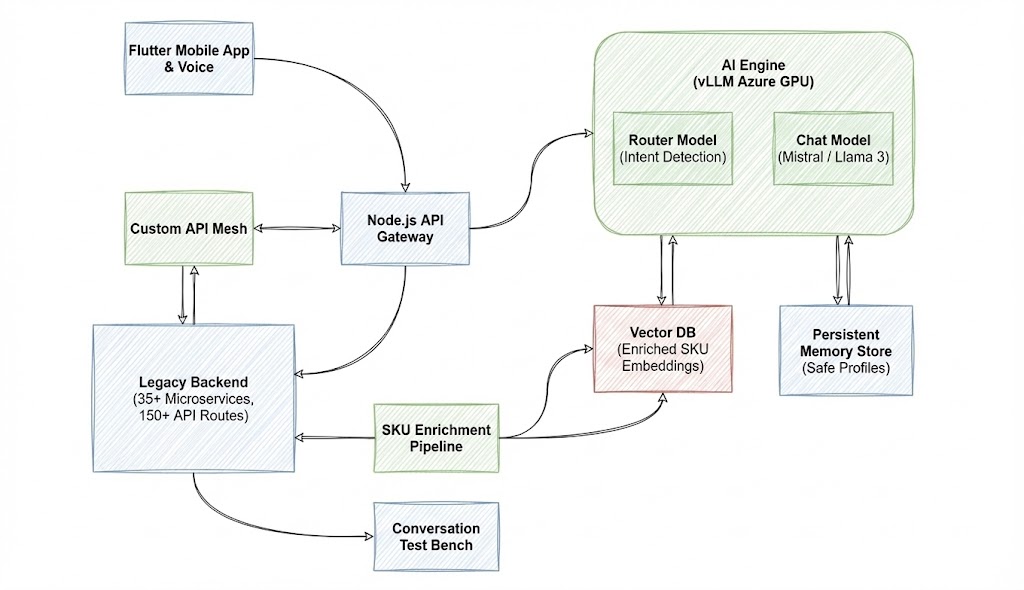

The AI needed to do more than generate text. It needed to query inventory APIs, check loyalty balances, search products by attribute, and calculate shipping estimates—all within a natural conversation. But when we started, OpenAI's function calling was either non-existent or unreliable for production. The open-source models we needed (for cost and compliance) had no native tool-use capabilities at all.

The platform's backend had evolved through multiple technology generations. 35+ microservices. 150+ API routes. Authentication patterns that varied depending on the era in which each service was built. The backend team was fully occupied keeping production stable, so they couldn't dedicate resources to building new integration layers for an experimental AI project. Whatever we built had to work around their constraints, not require them to change.

Product catalogs in retail are notoriously messy. Missing descriptions. Inconsistent categorization. Nutritional data that exists for some products but not others. When you're building semantic search and personalized recommendations, data quality determines your ceiling. The catalog had a million products. Most of them had incomplete or inconsistent metadata. You can't recommend 'gluten-free snacks for kids' if your snack products don't have allergen tags.

The platform served multiple retail brands. Shoppers expected personalization—but brands couldn't see each other's customer data. The AI needed to know 'this shopper prefers organic products, has a child with a nut allergy, and typically shops at Mall X on weekends'—without that context ever exposing raw PII to inference pipelines or leaking across brand boundaries.

Every brand on the platform had a distinct voice. The luxury cosmetics brand communicates differently from the budget grocery chain. The platform's own chatbot had its own personality. The AI needed to shift between these voices seamlessly based on context, and to do it consistently across millions of conversations without requiring separate fine-tuned models for each tenant.

"We weren't building features. We were building the infrastructure that would make those features possible—months before the rest of the industry had the tools to even attempt it."

The solution architecture operated across two layers—a data intelligence layer and an experience layer—connected through a real-time API mesh we built to bridge the legacy backend.

To solve function calling without native support, we built a two-model system. The first model handled natural conversation, while the second specialized in tool routing. The conversation model would generate intent; the routing model would execute it.
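The split between the two models can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the tool names, the JSON shape of a tool call, and the stub functions standing in for the two models are hypothetical and are not taken from the production system.

```python
import json
from typing import Callable, Dict, Optional

# Hypothetical tools; real ones would call the retail backend.
def check_inventory(sku: str, mall: str) -> dict:
    return {"sku": sku, "mall": mall, "in_stock": True}

def loyalty_balance(customer_ref: str) -> dict:
    return {"customer_ref": customer_ref, "points": 1200}

TOOLS: Dict[str, Callable[..., dict]] = {
    "check_inventory": check_inventory,
    "loyalty_balance": loyalty_balance,
}

def conversation_model(user_message: str) -> str:
    """Stub for the conversation model: returns a plain-language intent."""
    # A real system would call an LLM here; the stub keeps the sketch runnable.
    if "stock" in user_message.lower():
        return "intent: find out whether SKU-42 is available at Mall X"
    return "intent: small talk"

def routing_model(intent: str) -> str:
    """Stub for the routing model: maps an intent to a JSON tool call."""
    if "available" in intent:
        return json.dumps(
            {"tool": "check_inventory", "args": {"sku": "SKU-42", "mall": "Mall X"}}
        )
    return json.dumps({"tool": None, "args": {}})

def execute(tool_call_json: str) -> Optional[dict]:
    """Validate the router's output before any backend is touched."""
    call = json.loads(tool_call_json)
    name = call.get("tool")
    if name is None:
        return None  # nothing to call; the conversation model answers alone
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("args", {}))

def handle_turn(user_message: str) -> Optional[dict]:
    """One conversational turn: intent first, then routing and execution."""
    intent = conversation_model(user_message)
    return execute(routing_model(intent))
```

Keeping the registry lookup outside both models means a malformed or unknown tool name fails loudly instead of reaching a backend service.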

This architecture shipped 8-12 months before function calling became an industry standard.

Instead of passing raw customer data through inference, we built an abstracted context layer. The system stored preferences, family relationships, and behavioral patterns as anonymized attributes.
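One way such a context layer could look is sketched below in Python. It is an assumption, not the system's actual design: the brand-scoped HMAC token, the `ContextStore` class, and the attribute names are all hypothetical. The idea it shows is that inference only ever receives derived attributes keyed by an opaque token, never the raw customer record.

```python
import hashlib
import hmac
from dataclasses import dataclass, field

SECRET_KEY = b"replace-me"  # hypothetical; a real key would live in a secrets manager

def pseudonymize(customer_id: str, brand_id: str) -> str:
    """Derive a brand-scoped token: the same shopper gets unrelated tokens per brand."""
    message = f"{brand_id}:{customer_id}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()[:16]

@dataclass
class ShopperContext:
    token: str
    attributes: dict = field(default_factory=dict)

class ContextStore:
    """In-memory stand-in for the abstracted context layer."""

    def __init__(self) -> None:
        self._by_token = {}

    def remember(self, customer_id: str, brand_id: str, key: str, value) -> None:
        token = pseudonymize(customer_id, brand_id)
        ctx = self._by_token.setdefault(token, ShopperContext(token))
        ctx.attributes[key] = value

    def context_for_prompt(self, customer_id: str, brand_id: str) -> dict:
        """Return only derived attributes; no identifier leaves this layer."""
        ctx = self._by_token.get(pseudonymize(customer_id, brand_id))
        return dict(ctx.attributes) if ctx else {}
```

A short usage example, with made-up identifiers:

```python
store = ContextStore()
store.remember("cust-1", "brand-a", "prefers_organic", True)
store.remember("cust-1", "brand-a", "household_allergy", "nut")
print(store.context_for_prompt("cust-1", "brand-a"))
print(store.context_for_prompt("cust-1", "brand-b"))  # a different brand sees nothing
```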

We shipped this in early 2024. ChatGPT launched its memory feature in November 2024—eight months later.

We built a data pipeline that processed the full 1M+ product catalog. For each SKU, we generated AI descriptions, sourced nutritional data, and applied hierarchical tagging.
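A per-SKU enrichment step along these lines might look like the Python sketch below. The `Product` fields, the slash-separated category path, and the `stub_describe` placeholder for the description model are assumptions for illustration; nutritional data sourcing is left out to keep the example short.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

@dataclass
class Product:
    sku: str
    name: str
    category_path: str                 # hypothetical format, e.g. "Food/Snacks/Crackers"
    description: Optional[str] = None
    tags: List[str] = field(default_factory=list)

def hierarchical_tags(category_path: str) -> List[str]:
    """Expand 'A/B/C' into ['A', 'A/B', 'A/B/C'] so search can match any level."""
    parts = [p.strip() for p in category_path.split("/") if p.strip()]
    return ["/".join(parts[: i + 1]) for i in range(len(parts))]

def enrich(product: Product, describe: Callable[[Product], str]) -> Product:
    """Fill only the gaps; existing human-written fields are left untouched."""
    if not product.description:
        product.description = describe(product)
    for tag in hierarchical_tags(product.category_path):
        if tag not in product.tags:
            product.tags.append(tag)
    return product

def stub_describe(product: Product) -> str:
    # Placeholder for a model-generated description.
    leaf = product.category_path.split("/")[-1]
    return f"{product.name} ({leaf})"
```

Because `enrich` only fills missing fields and skips tags that already exist, running it again on the same product changes nothing, which suits a pipeline that runs continuously as new products arrive.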

We created brand voice profiles that captured tone, vocabulary constraints, and formality levels. This handled multi-tenant personality switching through structured prompting.
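As a rough illustration of structured prompting for multiple tenants, the Python sketch below turns a voice profile into a system prompt and checks a reply against the profile's vocabulary constraints. The `BrandVoice` fields, the prompt wording, and the example brand are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class BrandVoice:
    brand: str
    tone: str
    formality: str                       # e.g. "formal" or "casual"
    banned_words: Tuple[str, ...] = ()   # vocabulary constraints

def build_system_prompt(voice: BrandVoice) -> str:
    """Render one profile into a system prompt; one base model serves every tenant."""
    lines = [
        f"You are the shopping assistant for {voice.brand}.",
        f"Tone: {voice.tone}.",
        f"Formality: {voice.formality}.",
    ]
    if voice.banned_words:
        lines.append("Never use these words: " + ", ".join(voice.banned_words) + ".")
    return "\n".join(lines)

def violations(reply: str, voice: BrandVoice) -> List[str]:
    """Crude substring check for banned vocabulary in a generated reply."""
    lowered = reply.lower()
    return [word for word in voice.banned_words if word.lower() in lowered]
```

Switching tenants then means selecting a different profile at request time rather than loading a different model. The substring check is deliberately simple and would flag "ideal" for a banned "deal", so a real check would need word-boundary matching.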

We structured the engagement in four phases, designed to deliver incremental value while managing technical risk.

Standard tooling didn't exist for what we needed. We built three internal systems that made the project possible—and that we now use across all our retail AI engagements.

To build effective prompts and calibrate brand voice personalities, we needed high-quality training data. We built a platform where team members could have conversations with the model, annotate responses, flag quality issues, and generate synthetic variations. This data fed directly into prompt optimization.

Testing conversational AI at scale is fundamentally different from testing traditional software. Unit tests don't catch the ways conversations fail. We built a test bench that simulates buyers with different intents, preferences, and conversation styles—running automated quality assessments across model updates and catching regressions before they hit production.
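The core loop of a test bench like this can be sketched in a few lines of Python. The persona fields, the phrase-based pass criterion, and `stub_bot` are assumptions chosen to keep the example self-contained; a real bench would score transcripts with richer quality checks.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class BuyerPersona:
    name: str
    turns: List[str]          # scripted shopper messages
    must_mention: List[str]   # phrases a passing transcript has to contain

def run_persona(persona: BuyerPersona, bot: Callable[[str], str]) -> dict:
    """Play one persona against the bot and check the combined transcript."""
    replies = [bot(message) for message in persona.turns]
    transcript = " ".join(replies).lower()
    missing = [p for p in persona.must_mention if p.lower() not in transcript]
    return {"persona": persona.name, "passed": not missing, "missing": missing}

def run_suite(personas: List[BuyerPersona], bot: Callable[[str], str]) -> dict:
    """Aggregate results so pass rates can be compared across model updates."""
    results = [run_persona(p, bot) for p in personas]
    pass_rate = sum(r["passed"] for r in results) / len(results)
    return {"pass_rate": pass_rate, "results": results}

def stub_bot(message: str) -> str:
    # Stand-in for the deployed assistant.
    if "nut" in message.lower():
        return "Here are nut-free snacks available at Mall X."
    return "Could you tell me more about what you need?"
```

Running the same persona suite before and after a model or prompt change, then comparing `pass_rate`, is what turns this from a demo into a regression check.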

The enrichment pipeline was not a one-time data cleaning script but an ongoing process. It processed the full catalog with AI-generated descriptions, auto-sourced nutritional data, and hierarchical tagging, and it ran continuously as new products entered the system, maintaining data quality at scale.

Building at the frontier means building your own tools. We were 8-12 months ahead of industry standard on function calling, persistent memory, and production-grade conversational commerce infrastructure.

No amount of prompt engineering compensates for dirty product data. The enrichment pipeline wasn't optional—it was foundational.

Retail companies have years of valuable data locked in old systems. The ability to unlock that data without requiring platform rewrites is the actual value proposition.

Conversational AI fails in ways unit tests don't catch. The test bench—simulating real buyer conversations at scale—was essential for maintaining quality.

At 2M+ monthly active users, per-request costs for hosted LLM APIs would have been prohibitive. Serving the models ourselves with vLLM gave us the latency and cost structure the business required.

The internal tools we built—the data collection platform, the test bench, the enrichment pipeline—now form the foundation of how we approach every retail AI engagement. These aren't one-off solutions; they're reusable infrastructure that accelerates every project that follows.

The difference matters. Bolted-on AI feels like an add-on. AI-native platforms use intelligence as the organizing principle—the data layer, the experience layer, and the business logic are all designed for machine reasoning, not retrofitted for it.

Ionio partners with platforms in the $5M-$100M ARR range to make that transition. We don't build chatbots. We build the data infrastructure, AI engines, and experience layers that make intelligence central to how your platform creates value.

You're facing a version of what this client faced—valuable data locked in fragmented systems, the need to compete with AI-native experiences, or the gap between your technical vision and the specialized talent required to execute it.

You need a strategic partner who understands both the technology and the business model—not a dev shop that builds what you specify.

You want a chatbot for your dashboard, AI for the press release, or features that OpenAI will commoditize in six months.

We'll tell you that directly.

30 minutes. No deck. We'll talk through your challenge, share some relevant work, and see if it makes sense to work together. Or honestly, just grab coffee and chat—no agenda needed.

Book an Intro Call →

Prefer email? contact@ionio.ai