AI-generated content is everywhere, and its presence is growing rapidly. From text and images to audio and video, generative AI (GenAI) is reshaping how we work and create. But this new era of innovation comes with a critical challenge: ensuring content security. How do we know what's real and what's not? How do we protect intellectual property and combat misinformation when AI can create hyper-realistic content that's nearly impossible to distinguish from human-made work?

The answer lies in a powerful new technology: generative watermarking. This isn't your average digital watermark; it's a deeply integrated approach designed to embed invisible, verifiable signals directly into AI-generated content at the very moment of its creation. For enterprises, this technology is no longer a luxury; it's a necessity for protecting assets, preserving brand trust, and navigating the complexities of an AI-driven world.

The Rise of GenAI in Business

The business world is experiencing a profound shift. Generative AI is no longer just a futuristic concept; it has become a core business tool, with a significant majority of organizations exploring its potential. This widespread adoption is fueled by GenAI's ability to augment human creativity and dramatically boost productivity. We're seeing its impact in everything from supply chain optimization and fraud detection in finance to accelerating drug discovery in pharmaceuticals.

But this rapid adoption, particularly through decentralized Software-as-a-Service (SaaS) applications, has created new security blind spots. The unsanctioned use of AI tools by employees, a phenomenon known as "Shadow AI", can lead to the inadvertent exposure of sensitive data. The ease of access to free AI tools and low-barrier integrations has made this a pervasive problem. Beyond Shadow AI, enterprises also face new and evolving risks like prompt injection attacks, where malicious actors trick AI models into revealing confidential information. The FBI has even warned that criminals are leveraging GenAI to scale financial fraud schemes, creating more believable scams.

This dynamic creates a fundamental challenge: a security framework that only focuses on perimeter defense is no longer sufficient. When AI tools are used at the individual level, the focus must shift to securing the content itself, regardless of where or how it was created.

What is Generative Watermarking?

Generative watermarking is a new standard in content security. It's a technique that embeds an imperceptible yet identifiable signature directly into AI-generated content at its point of creation. Unlike traditional watermarking, which is applied after content has been produced, this method integrates content protection directly into the generative AI model itself. This means the watermark is an intrinsic part of the content from the start. These markers serve as a verifiable indicator of the content's AI origin, allowing it to be traced back to its source model or creator.

Beyond Traditional Watermarks

Traditional digital watermarking, while useful, is simply not equipped for the age of AI. Conventional methods often involve adding visible logos or embedding metadata that can be easily removed or forged with modern AI tools, sometimes with a single click. For text, a simple paraphrase or translation can completely garble a hidden message.

Generative watermarking, on the other hand, is specifically designed to address the challenges of provenance and authenticity in a world of synthetic media. It embeds a signal that is resilient to modification and intrinsically linked to the content's AI origin.

The Core Principles of Generative Watermarking

An effective generative watermarking scheme is built on three essential security goals:

- Robustness: The watermark must remain detectable even after the content has been compressed, cropped, edited, or otherwise modified. This ensures the mark's long-term utility in establishing content origin.

- Undetectability: The watermark should not noticeably impact the quality of the generated content. Watermarked outputs should be visually or audibly indistinguishable from non-watermarked content produced by the same model, preserving the integrity of the AI's output.

- Unforgeability: It must be computationally infeasible for anyone other than the legitimate model operator to create content with that specific watermark. This prevents bad actors from falsely attributing content to a particular AI source.

These goals are often in a delicate balance. A more robust watermark may require more significant alterations to the content, which can make it more perceptible. This is an ongoing "cat-and-mouse game" between watermarking techniques and adversarial attacks, one that is becoming more sophisticated with the use of cryptographic principles.

The Two-Step Process: Embedding and Detection

Generative watermarking can be thought of as an invisible seal of authenticity that’s built into AI-generated content. The process has two key stages: embedding (adding the watermark during content creation) and detection (verifying its presence later). Together, they form a cycle of traceability and trust in synthetic media.

Embedding (Encoding the Watermark)

Instead of adding a visible stamp or fragile metadata after creation, generative watermarking works inside the AI model as content is being generated. This makes the watermark an intrinsic part of the output.

Text Watermarking:

When an AI generates text, it can be guided to favor certain words (from a “green list”) and avoid others (a “red list”). To a human reader, the sentence looks completely normal, but the distribution of word choices follows a hidden statistical pattern. This pattern acts like a fingerprint.

Example: If the model is watermarked, it might choose the word “rapidly” instead of “quickly” more often than expected. Alone, a single choice is meaningless, but across thousands of words the pattern becomes detectable with high statistical confidence.
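The list-based bias above can be implemented in several ways. A common one, sketched here under simplifying assumptions, adds a constant δ to the logits (the model's raw next-token scores) of green-list tokens before sampling, and reseeds the green list from the previous token so the pattern shifts at every position. `GAMMA`, `DELTA`, the toy vocabulary and the function names are hypothetical; a real deployment would hook this into the model's own sampling loop.

```python
import hashlib
import math
import random

GAMMA = 0.5  # hypothetical: fraction of the vocabulary placed on each green list
DELTA = 2.0  # hypothetical: logit boost added to green tokens


def green_list(prev_token: str, vocab: list[str], key: str) -> set[str]:
    """Keyed green list that is reseeded from the previous token at every step."""
    seed = int.from_bytes(hashlib.sha256(f"{key}:{prev_token}".encode()).digest()[:8], "big")
    shuffled = sorted(vocab)  # sort first so the split does not depend on input order
    random.Random(seed).shuffle(shuffled)
    return set(shuffled[: int(GAMMA * len(shuffled))])


def sample_watermarked(logits: dict[str, float], prev_token: str, key: str, rng: random.Random) -> str:
    """Add DELTA to green-token logits, apply a softmax, and sample one token."""
    greens = green_list(prev_token, list(logits), key)
    boosted = {t: s + (DELTA if t in greens else 0.0) for t, s in logits.items()}
    top = max(boosted.values())
    weights = [math.exp(s - top) for s in boosted.values()]  # numerically stable softmax numerators
    return rng.choices(list(boosted), weights=weights, k=1)[0]


vocab = [f"w{i}" for i in range(10)]
flat_logits = {t: 0.0 for t in vocab}  # a toy "model" with no preference at all
rng, prev, hits = random.Random(0), "<s>", 0
for _ in range(1000):
    token = sample_watermarked(flat_logits, prev, "secret-key", rng)
    hits += token in green_list(prev, vocab, "secret-key")
    prev = token
print(hits / 1000)  # expected value here is 5·e²/(5·e² + 5) ≈ 0.88, versus 0.5 without the boost
```

Because the boost is soft, a token the model strongly prefers can still win even when it is red, which is how this style of watermark tries to preserve fluency.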


Image & Video Watermarking:

In AI-generated images (especially those from diffusion models), watermarks can be embedded in the initial random noise from which the image is generated. A method called Tree-Ring Watermarking hides a ring-shaped signal in the Fourier space of that noise (like planting rings in a tree trunk). These signals are invisible to the eye and are designed to survive edits such as cropping, scaling, or compression.
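The sketch below, assuming numpy and skipping the diffusion model entirely, illustrates the core idea as we understand the published Tree-Ring method: write a key that is constant on concentric rings into the centre of the initial noise's shifted Fourier spectrum, then check for that key after recovering the noise. In a real system the noise would be recovered by approximately inverting the diffusion sampler (for example, DDIM inversion); here we simply add a little noise as a stand-in. `SIZE`, `RADIUS` and the function names are hypothetical.

```python
import numpy as np

SIZE = 64    # hypothetical: side length of one latent-noise channel
RADIUS = 10  # hypothetical: radius of the low-frequency disc that carries the key


def ring_key(seed: int) -> tuple[np.ndarray, np.ndarray]:
    """Return a disc mask and a key that is constant on each ring inside it."""
    rng = np.random.default_rng(seed)
    yy, xx = np.mgrid[:SIZE, :SIZE]
    ring = np.round(np.hypot(yy - SIZE // 2, xx - SIZE // 2)).astype(int)
    mask = ring <= RADIUS
    ring_values = rng.normal(0.0, SIZE, RADIUS + 1)  # one real value per ring
    key = np.where(mask, ring_values[np.minimum(ring, RADIUS)], 0.0)
    return mask, key


def embed(noise: np.ndarray, mask: np.ndarray, key: np.ndarray) -> np.ndarray:
    """Overwrite the centred Fourier disc of the initial noise with the ring key."""
    freq = np.fft.fftshift(np.fft.fft2(noise))
    freq[mask] = key[mask]
    # Real rings symmetric about the centre keep the spectrum Hermitian, so the result stays real
    return np.fft.ifft2(np.fft.ifftshift(freq)).real


def ring_distance(recovered_noise: np.ndarray, mask: np.ndarray, key: np.ndarray) -> float:
    """Mean absolute gap between the recovered spectrum and the key; small means watermarked."""
    freq = np.fft.fftshift(np.fft.fft2(recovered_noise))
    return float(np.mean(np.abs(freq[mask] - key[mask])))


rng = np.random.default_rng(0)
mask, key = ring_key(seed=42)
marked_noise = embed(rng.standard_normal((SIZE, SIZE)), mask, key)
# Stand-in for DDIM inversion: assume the noise comes back with a small error
recovered = marked_noise + 0.1 * rng.standard_normal((SIZE, SIZE))
unmarked = rng.standard_normal((SIZE, SIZE))
print(ring_distance(recovered, mask, key) < ring_distance(unmarked, mask, key))  # True
```

Placing the key at low frequencies and making it rotationally symmetric is what gives this family of methods its reported robustness to rotation and cropping, though real images also depend on how accurately the noise can be inverted.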


Audio Watermarking:

AI-generated speech or music can be watermarked by making tiny, inaudible tweaks to frequencies or timing. Humans hear it as perfectly natural, but specialized detectors can recognize the hidden changes.
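A classic way to make such tweaks, swapped in here as an illustration rather than any specific product's method, is spread-spectrum watermarking: add a faint, keyed pseudo-random ±1 sequence to the samples, then detect it by correlating the audio with the same sequence. The sketch below is a minimal, time-domain version; `ALPHA`, the key string and the function names are hypothetical, and a real system would shape the added signal with a psychoacoustic model and add synchronization so it survives playback and re-recording.

```python
import hashlib
import math
import random

ALPHA = 0.01  # hypothetical embedding strength relative to a full-scale signal of 1.0


def pn_sequence(key: str, n: int) -> list[float]:
    """Keyed pseudo-random +/-1 'spreading code' shared by embedder and detector."""
    seed = int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed(samples: list[float], key: str) -> list[float]:
    """Add the faint keyed pattern to every sample."""
    return [s + ALPHA * c for s, c in zip(samples, pn_sequence(key, len(samples)))]


def detect(samples: list[float], key: str) -> float:
    """Normalized correlation with the key's code; roughly N(0, 1) for unmarked audio."""
    corr = sum(s * c for s, c in zip(samples, pn_sequence(key, len(samples))))
    energy = math.sqrt(sum(s * s for s in samples))
    return corr / energy if energy else 0.0


sr = 48_000
tone = [0.5 * math.sin(2 * math.pi * 440 * t / sr) for t in range(sr)]  # 1 s of a 440 Hz tone
marked = embed(tone, "studio-key")
print(f"marked: {detect(marked, 'studio-key'):.1f}  unmarked: {detect(tone, 'studio-key'):.1f}")
```

The marked score should sit several units above the unmarked one: each added chip is tiny, but the correlation adds up all 48,000 of them, which is the same "many weak votes" idea as the text watermark.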


Detection (Verifying the Watermark)

Once a watermark has been embedded, it can later be detected to prove whether content originated from a specific AI system.

Text Detection:

A statistical test is run on the text to measure how often green-list words appear compared to chance. If the frequency is much higher than expected, the system confirms the presence of a watermark. This is measured using a Z-score, where higher values indicate stronger evidence of watermarking.
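To make the test concrete, here is a worked example with illustrative numbers (not measurements). Suppose the detector sees T = 200 tokens, the green list covers a fraction γ = 0.5 of the vocabulary, and x = 130 of the tokens are green:

$$z = \frac{x - \gamma T}{\sqrt{T\gamma(1-\gamma)}} = \frac{130 - 100}{\sqrt{200 \cdot 0.5 \cdot 0.5}} = \frac{30}{\sqrt{50}} \approx 4.24$$

Unwatermarked text would be expected to land near x = 100 and z ≈ 0; a one-sided Z-score of about 4.24 would occur by chance with probability on the order of $10^{-5}$.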

Image & Video Detection:

For images from diffusion models, the generative process can be approximately reversed (for example, with DDIM inversion) to recover the initial noise and extract the hidden signal from it. Robust schemes are designed so that the watermark survives moderate editing or compression, allowing detection tools to verify it.

Audio Detection:

Specialized algorithms analyze the frequency spectrum or time-domain signals of audio. Subtle distortions introduced during watermarking can be detected with high accuracy, even after the audio has been played, recorded, or compressed.


A Developer's Look: Code Examples and Techniques

To truly understand how generative watermarking works, we need to move beyond theory and look at the practical implementation. Production-ready codebases are beyond the scope of this article, but we can examine the core logic behind the most common watermarking approaches.

1. Watermarking Text: A Probabilistic Approach

The foundational idea of probabilistic token watermarking for LLMs is to subtly influence the model's word choices during the generation process. This influence creates a statistical pattern that is virtually invisible to a human but easily verifiable by a detection algorithm.

Here's a simplified, conceptual Python-like code snippet to illustrate the embedding and detection process:

Embedding (Watermark Creation)

```python
import hashlib
import random
from typing import List


def get_green_list(vocab: List[str], secret_key: str) -> set:
    """Generates a pseudo-random 'green list' from the vocabulary using a secret key."""
    green_list = set()
    for token in vocab:
        # Hash token + key so each token is deterministically (and secretly) green or red;
        # on average about half the vocabulary ends up green
        if int(hashlib.sha256((token + secret_key).encode()).hexdigest(), 16) % 2 == 0:
            green_list.add(token)
    return green_list


def generate_watermarked_text(model, prompt: str, green_list: set, max_tokens: int = 100) -> str:
    """Generates text biased towards green-list tokens.

    `model.get_next_token_candidates` is a hypothetical interface that returns a
    non-empty list of plausible next tokens; real LLMs expose logits instead.
    """
    generated_tokens = []
    # Simplified loop for demonstration
    for _ in range(max_tokens):
        next_token_candidates = model.get_next_token_candidates(prompt, generated_tokens)
        # Apply the watermarking bias
        green_candidates = [t for t in next_token_candidates if t in green_list]
        # Use a higher probability for green tokens
        if green_candidates and random.random() < 0.8:  # 80% chance to pick a green token
            chosen_token = random.choice(green_candidates)
        else:
            chosen_token = random.choice(next_token_candidates)
        generated_tokens.append(chosen_token)
    # Join with spaces so the detector can recover the tokens with text.split()
    return " ".join(generated_tokens)
```

Detection (Watermark Verification)

To detect the watermark, an algorithm takes the text and the same secret key. It then measures the statistical bias of green-list tokens.

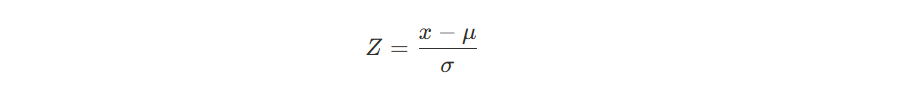

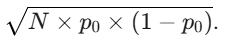

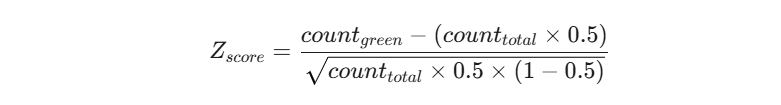

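The formula for the Z-score can be represented as:

$$z = \frac{x - \mu}{\sigma}$$

where:

- x is the observed number of "green" tokens.

- μ is the expected number of "green" tokens under a null hypothesis (i.e., no watermark): μ = γT, where T is the total number of tokens and γ is the fraction of the vocabulary on the green list.

- σ is the standard deviation of the number of "green" tokens under that null hypothesis, which for a binomial distribution is σ = √(Tγ(1 − γ)).

Therefore, a more complete formula for this specific context is:

$$z = \frac{x - \gamma T}{\sqrt{T\gamma(1-\gamma)}}$$

With γ = 0.5, as assumed in the code below, this simplifies to z = (x − T/2) / (√T / 2).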

```python
import math
from typing import List


def detect_watermark(text: str, secret_key: str, vocab: List[str]) -> float:
    """
    Detects the watermark by calculating a Z-score.
    A high Z-score (e.g., > 4) indicates strong evidence of watermarking;
    unwatermarked text should score close to 0.
    """
    # get_green_list() is the helper defined in the embedding example
    green_list = get_green_list(vocab, secret_key)
    tokens = text.split()
    total_count = len(tokens)
    if total_count == 0:
        return 0.0  # no tokens, no evidence either way
    # Observed number of green tokens (x)
    green_count = sum(1 for token in tokens if token in green_list)
    # This assumes a 50/50 split of green/red tokens (gamma = 0.5)
    expected_green_proportion = 0.5
    # The standard deviation for a binomial distribution (sigma)
    std_dev = math.sqrt(total_count * expected_green_proportion * (1 - expected_green_proportion))
    # Calculate the Z-score: (x - mu) / sigma
    return (green_count - total_count * expected_green_proportion) / std_dev


def p_value(z_score: float) -> float:
    """One-sided p-value: chance of a Z-score this high if the text is unwatermarked."""
    return 0.5 * math.erfc(z_score / math.sqrt(2))
```

2. Watermarking Images: The Invisible Fingerprint

For images, the watermark is often a subtle, structural pattern embedded into the image's "frequency space" rather than its raw pixels. This makes it resilient to common image modifications like resizing or cropping, which operate on the pixel domain but don't completely destroy the underlying frequency information.

How it works (Simplified):

- Transform: The image is converted from the standard pixel space (a grid of colors) into the frequency space using a Fourier transform. Think of this as breaking the image down into its fundamental building blocks of patterns and waves.

- Embed: An imperceptible, secret signal (the watermark) is added to the high-frequency components. These are the details of the image that are least visible to the human eye.

- Inverse Transform: The frequency data is converted back to a pixel-based image. The result is an image that looks identical to the original but contains the hidden watermark.

- Detection: To verify the image, the process is reversed. The image is transformed back into the frequency domain, and a detector looks for the specific, embedded signal.
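The four steps above can be sketched in a few lines of numpy, assuming a grayscale image and a simple blind scheme chosen for illustration (not a specific product's method): a secret key selects a set of high-frequency Fourier coefficients and nudges each magnitude up or down, and detection correlates those magnitudes with the same key. `N`, `ALPHA`, `BAND`, `NUM_COEFFS` and the function names are hypothetical parameters.

```python
import numpy as np

N = 128                  # hypothetical: grayscale image is N x N
ALPHA = 0.2              # hypothetical: relative magnitude change (robustness vs. visibility knob)
BAND = (N // 4, N // 2)  # hypothetical: band of radial frequencies that carries the mark
NUM_COEFFS = 1000        # hypothetical: how many coefficients the key selects


def keyed_coefficients(key: int):
    """Secretly choose coefficients in the band and a +/-1 sign for each."""
    rng = np.random.default_rng(key)
    u, v = np.mgrid[1 : N // 2, 0:N]  # rows 1..N/2-1 never overlap their own mirror images
    radius = np.hypot(u, np.minimum(v, N - v))
    candidates = np.argwhere((radius >= BAND[0]) & (radius < BAND[1]))
    picked = candidates[rng.choice(len(candidates), size=NUM_COEFFS, replace=False)]
    us, vs = picked[:, 0] + 1, picked[:, 1]  # +1 converts grid row index back to frequency u
    signs = rng.choice([-1.0, 1.0], size=NUM_COEFFS)
    return us, vs, signs


def embed(image: np.ndarray, key: int) -> np.ndarray:
    """Transform, scale the chosen magnitudes up or down, and inverse-transform."""
    us, vs, signs = keyed_coefficients(key)
    freq = np.fft.fft2(image)
    scale = 1.0 + ALPHA * signs
    freq[us, vs] *= scale
    freq[(-us) % N, (-vs) % N] *= scale  # scale the conjugate partner too, so the image stays real
    return np.fft.ifft2(freq).real


def detect(image: np.ndarray, key: int) -> float:
    """Normalized correlation of the chosen magnitudes with the signs; ~N(0, 1) if unmarked."""
    us, vs, signs = keyed_coefficients(key)
    mags = np.abs(np.fft.fft2(image))[us, vs]
    return float(np.sum(signs * mags) / np.sqrt(np.sum(mags**2)))


rng = np.random.default_rng(0)
image = rng.random((N, N))  # stand-in for a real grayscale image with values in [0, 1]
marked = embed(image, key=1234)
print(f"marked: {detect(marked, 1234):.1f}  original: {detect(image, 1234):.1f}")
```

The detector needs only the key, not the original image. Raising `ALPHA` or `NUM_COEFFS` strengthens the signal but also the visible change, and moving `BAND` lower trades some invisibility for better survival under compression, which tends to discard the highest frequencies first.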

The "Cat-and-Mouse" Game: Watermark Removal

Watermark removal is a critical field of study in its own right, falling under adversarial attacks. Here's a brief overview of common removal techniques:

- Simple Paraphrasing (Text): The easiest way to remove a text watermark is to simply have another AI model paraphrase the content. This changes the word choices, erasing the original statistical pattern.

- Image-to-Image Re-Generation (Images): Attackers can use a diffusion model to "re-generate" a watermarked image. By adding noise to the image and letting the model denoise it, often guided by a similar prompt, the attacker obtains a visually similar image in which the original watermark signal is largely washed out.

- Aggressive Compression: Extreme compression (like low-quality JPEGs) or heavy filtering can destroy the subtle statistical signals of a watermark, as the process discards the high-frequency data where the watermark is stored.
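To see why paraphrasing is so effective against the statistical text watermark, the sketch below computes the expected Z-score after a fraction of tokens is rewritten by an unwatermarked model. It uses a deliberately simple model that we assume for illustration: rewritten tokens are green only by chance (probability γ), untouched tokens keep their watermarked green rate, and the inputs (200 tokens, 65% green) are made-up numbers, not measurements.

```python
import math


def z_after_paraphrase(num_tokens: int, green_fraction: float, replaced: float, gamma: float = 0.5) -> float:
    """Expected Z-score when a fraction `replaced` of tokens is rewritten without the watermark."""
    # Untouched tokens stay green at the watermarked rate; rewritten ones are green by chance
    expected_green = num_tokens * ((1 - replaced) * green_fraction + replaced * gamma)
    return (expected_green - gamma * num_tokens) / math.sqrt(num_tokens * gamma * (1 - gamma))


for replaced in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"{replaced:.0%} rewritten -> z ≈ {z_after_paraphrase(200, 0.65, replaced):.2f}")
```

Under these assumptions z falls linearly with the rewritten fraction, from about 4.24 with no rewriting to about 2.12 when half the text is rewritten, so even a partial paraphrase can push a passage below a conservative detection threshold.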

This is why the "Robustness vs. Imperceptibility" trade-off is so critical. The most advanced watermarking techniques use sophisticated, multi-layered embedding to survive these attacks while remaining invisible.

Does Watermarking Affect Output Quality?

One of the biggest questions enterprises ask is: “Will watermarking make my AI-generated content look or sound worse?” The answer is reassuring: when done right, no. Generative watermarking is designed to be invisible to end users.

- For Text: Readers won’t notice a thing. The model still writes fluent, natural sentences. The watermark only introduces subtle statistical patterns that can be detected by algorithms, not by human eyes.

- For Images & Video: Watermarks are hidden in the noise space or in pixel-level adjustments that are invisible to the human eye. The content looks identical to an unwatermarked version, and robust schemes are designed to keep the hidden signature even after common modifications like cropping, resizing, or compression.

- For Audio: Whether speech, podcasts, or music, the watermark operates at imperceptible frequencies. To listeners, the audio remains crisp and natural.

The Balance: Robustness vs. Imperceptibility

Effective watermarking must strike a delicate balance between:

- Robustness: Surviving edits, paraphrasing, or platform compression.

- Imperceptibility: Ensuring no visible or audible difference in the content.

- Standardization: Making the watermark consistent across content types and enterprise workflows.

This is often described as a cat-and-mouse game: if a watermark is too subtle, it risks being erased; if it’s too strong, it risks being noticed. State-of-the-art techniques like statistical text watermarking and Tree-Ring image watermarking aim to sit in the sweet spot: strong enough for verification, yet invisible to users.

Why Enterprises Should Care

Beyond the technicalities, generative watermarking is a strategic tool for any enterprise operating in an AI-driven landscape. It addresses some of the most pressing business risks today.

Protecting Intellectual Property

In a world where AI-generated content can be used to create proprietary synthetic data, watermarking provides a critical layer of defense against IP theft. By embedding a verifiable signature, it becomes much harder for unauthorized parties to falsely claim ownership of original AI-generated content. This is particularly important in a legal landscape where the copyright of AI-generated work is still complex and often hinges on the extent of "human creative contribution". Watermarks provide concrete, verifiable evidence of content origin, which can be invaluable in legal disputes.

Safeguarding Brand Reputation

In an era of deepfakes and misinformation, building and maintaining consumer trust is paramount. Generative watermarking offers a powerful mechanism to ensure transparency about AI's involvement in content creation. By providing a machine-readable signal of AI origin, brands can proactively address public skepticism and demonstrate a commitment to authenticity. This capability is part of a "trust by design" framework where content becomes "self-aware," carrying its own identity and rules. This helps mitigate the risk of a brand being associated with or falling victim to deceptive content.

Meeting Regulatory Requirements

The global regulatory landscape for AI is rapidly evolving, with a clear trend toward mandating content marking and provenance. The EU AI Act requires providers of generative AI systems to mark synthetic outputs in a machine-readable, detectable format, and the 2023 US Executive Order on AI (since rescinded) directed federal agencies to develop guidance on content authentication and watermarking. Generative watermarking is widely seen as a leading technical solution to meet these emerging legal obligations. By adopting watermarking solutions, enterprises can embed "compliance by design" into their AI systems, ensuring that their content adheres to legal requirements from the moment it is created.

Ensuring Content Accountability

Generative watermarking is a pivotal technology for establishing content accountability. It creates a tamper-resistant and verifiable record of content origin. Unlike external metadata that can be easily stripped, the watermark is intrinsically linked to the content, making it a reliable source of truth. This is particularly important for mitigating liability in scenarios where AI-generated content is misused. For example, if an AI platform is used to generate synthetic data for fraudulent purposes, the company that created the data could be held liable. Automatically watermarking such data helps deter bad actors and allows companies to maintain a clear record for auditability and legal defense.

Enterprise Use Cases

Generative watermarking is a versatile tool with applications across many industries.

A Look at a Real-World Use Case

Consider the financial sector. Banks and investment firms use vast amounts of data to train their AI models. However, using real customer data for this purpose is a massive privacy and security risk. To address this, they use Generative AI to create synthetic tabular data: fabricated data that statistically mirrors real customer information but is designed not to be traceable to individuals. This synthetic data is invaluable, but its use comes with a new risk: what if it falls into the wrong hands?

This is where watermarking comes in. By automatically embedding an invisible watermark into every row of the synthetic data as it is generated, the financial firm can keep tabs on it. A watermark does not stop the data from being stolen, but it lets the firm prove ownership if the data leaks or is used to train a competitor's model, and it deters bad actors from using it for fraudulent activities, like deceiving regulators or investors. In the event of a legal dispute, the watermark provides verifiable evidence of content origin, helping protect the company from potential liability.
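The article does not specify how row-level marking is done. As a swapped-in illustration, the sketch below uses a simplified form of the classic keyed-parity approach to relational watermarking: an HMAC of each row's primary key decides, secretly, which rows carry a mark and which parity bit the least significant digit of a numeric column must have. This classic scheme marks a keyed subset of rows to keep distortion small; setting the hypothetical `SELECT_ONE_IN` to 1 would mark every row. The `id` and `amount_cents` columns and `SECRET_KEY` are also hypothetical.

```python
import hashlib
import hmac
from typing import Optional

SECRET_KEY = b"hypothetical-watermark-key"  # hypothetical; keep real keys in a secrets manager
SELECT_ONE_IN = 4  # hypothetical: about 1 row in 4 carries a mark (1 would mark every row)


def _mark_bit(row_id: str) -> Optional[int]:
    """Keyed parity bit for a selected row, or None if the key does not select this row."""
    digest = hmac.new(SECRET_KEY, row_id.encode(), hashlib.sha256).digest()
    h = int.from_bytes(digest[:8], "big")
    return (h >> 8) & 1 if h % SELECT_ONE_IN == 0 else None


def watermark_rows(rows: list[dict]) -> list[dict]:
    """Copy the rows, forcing the parity of `amount_cents` on keyed rows (changes it by at most 1)."""
    marked = []
    for row in rows:
        row = dict(row)
        bit = _mark_bit(row["id"])
        if bit is not None and row["amount_cents"] % 2 != bit:
            row["amount_cents"] += 1
        marked.append(row)
    return marked


def match_rate(rows: list[dict]) -> float:
    """Share of keyed rows whose parity matches the key: 1.0 for marked data, about 0.5 otherwise."""
    selected = [(row, bit) for row in rows if (bit := _mark_bit(row["id"])) is not None]
    if not selected:
        return 0.0
    return sum((row["amount_cents"] % 2) == bit for row, bit in selected) / len(selected)


rows = [{"id": f"cust-{i}", "amount_cents": 1000 + 7 * i} for i in range(2000)]
marked = watermark_rows(rows)
print(match_rate(marked), match_rate(rows))  # first value is 1.0; second is near 0.5
```

In practice a verifier would apply a statistical threshold rather than require a perfect 1.0, since legitimate edits or an attacker's noise can flip some of the marked digits.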

Marketing & Branding: Securing AI-Generated Campaigns

Generative AI is a powerful tool for creating marketing content, from ad copy to visual campaigns. Watermarking helps brands maintain authenticity by proving the origin of these assets, ensuring they align with brand guidelines and values. This technology also enables forensic tracing to identify the source of leaked campaign materials or track unauthorized use of brand assets online.

Media & Publishing: Combating Fake News and Deepfakes

In media and journalism, watermarking provides a critical mechanism for authenticating visuals and combating misinformation. By embedding a verifiable signature, journalists and fact-checkers can trace media back to its source, even after it has been shared across platforms. This is particularly vital in the fight against deepfakes, as watermarks can be applied at various stages of the deepfake workflow, providing critical evidence for legal action against perpetrators.

Finance & Legal: Ensuring Report and Document Integrity

In the highly regulated worlds of finance and legal, generative watermarking is essential for data integrity and accountability. As discussed in the use case above, it is used to watermark valuable synthetic data. Beyond that, watermarks can be applied to critical documents and reports, making them tamper-resistant and auditable. The embedded information can include the AI model used or the generation source, creating a clear, tamper-evident audit trail for sensitive documentation.

E-Commerce & Retail: Verifying Product and Design Assets

For e-commerce and retail, watermarking helps verify the authenticity of digital assets like product images and designs. By embedding invisible identifiers, businesses can track where their images appear online and maintain consistent brand representation. Watermarking also plays a significant role in the digital collectibles and NFT market, helping to prove ownership and protect against counterfeiting.


The Bigger Picture: Integrating Watermarking into Enterprise Workflows

The real value of generative watermarking for an enterprise isn't in a single technical solution but in how it's integrated into the entire AI-driven workflow.

Enterprise Integration

- API-Level Watermarking: The most seamless approach is for AI providers (like OpenAI, Google, and Amazon) to offer watermarking as a core, opt-out feature on their APIs. This ensures that every piece of content generated for a business is marked from the moment of creation.

- On-Premises Models: For companies using their own, self-hosted LLMs and diffusion models, the watermarking algorithm is integrated directly into the model's generation pipeline (for example, the token-sampling step). This ensures a higher level of control and security, as the secret key never leaves the company's network.

Auditing and Traceability

- Real-Time Monitoring: Businesses can deploy real-time monitoring tools to scan for their watermarked content online. This allows them to instantly identify where their IP is being used, a key feature for legal enforcement and brand protection.

- Digital Audit Trails: By linking the watermark to a specific user and timestamp in an internal database, companies can create a tamper-evident audit trail. This is crucial for accountability and liability management, especially in highly regulated industries.
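A plain database row linking a watermark to a user and timestamp can be edited silently. One common way to make such a log tamper-evident (rather than literally unalterable) is to hash-chain the entries so each record's hash covers the previous one, as sketched below. The field names and the `watermark_id` values are hypothetical; deleting the newest entries is not caught by the chain alone, which is why the latest hash is often anchored somewhere external.

```python
import hashlib
import json
from datetime import datetime, timezone


def append_entry(log: list[dict], watermark_id: str, user: str) -> dict:
    """Append an audit record whose hash covers the previous record's hash."""
    entry = {
        "watermark_id": watermark_id,
        "user": user,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "prev_hash": log[-1]["hash"] if log else "0" * 64,
    }
    entry["hash"] = hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()
    log.append(entry)
    return entry


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; an edited or reordered record, or one deleted before the end, breaks it."""
    prev = "0" * 64
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev:
            return False
        if hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest() != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, "wm-001", "alice")
append_entry(audit_log, "wm-002", "bob")
print(verify_chain(audit_log))  # True
audit_log[0]["user"] = "mallory"
print(verify_chain(audit_log))  # False
```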

By building these workflows, enterprises move from simply detecting AI content to managing it, ensuring that this powerful technology is used responsibly and securely. This proactive, "trust by design" approach is the future of content in the age of generative AI.

Challenges and Limitations

While the potential of generative watermarking is immense, it is not without its challenges.

- Robustness vs. Imperceptibility: There is a fundamental trade-off between how robust a watermark is and its imperceptibility. A strong watermark is more resistant to attacks like compression or cropping but may be more noticeable to a human. Conversely, a subtle, invisible watermark is easier to remove.

- Detection Accuracy: Common transformations like compression and cropping can easily destroy subtle watermarks, rendering AI-generated content undetectable.

- Standardization: The lack of a single, industry-wide standard for watermarking creates a fragmented ecosystem. This lack of interoperability makes it difficult for a watermark embedded by one system to be readable by another.

- Ethical Concerns: The ability to trace content back to its source, while a benefit for accountability, also raises privacy concerns. For instance, a human rights defender using AI to document abuses could be identified by an oppressive regime if the content is watermarked. This highlights the need for privacy-aware watermarking frameworks that balance traceability with individual safety and freedom of expression.

Future Outlook

Despite the challenges, the future of generative watermarking is promising. The path forward is likely to be paved by two key developments.

First, AI content standardization will be driven by global regulations like the EU AI Act, which will compel AI developers to move toward a unified and reproducible framework for watermarking. This will lead to the creation of shared benchmarking libraries that can be used to consistently evaluate and compare different watermarking solutions.

Second, the most significant advancement will be the convergence of generative watermarking with blockchain technology. While a watermark provides the intrinsic "fingerprint" of content, a blockchain can serve as an immutable "vault" to securely store the verifiable proof of creation and ownership. This combination of a deep, integrated signal with an unchangeable public ledger creates a comprehensive "trust by design" framework for the digital world. This synergy will not only enhance security and accountability but also provide a reliable foundation for content monetization and a more trustworthy digital ecosystem.

Conclusion

The era of generative AI is here, bringing with it incredible opportunities and complex challenges. As AI-generated content proliferates, the need to ensure its authenticity, provenance, and integrity becomes paramount. Generative watermarking is a foundational technology that provides a proactive solution, embedding trust and accountability directly into the digital fabric of content. By strategically adopting watermarking and preparing for a future where it converges with technologies like blockchain, enterprises can protect their intellectual property, preserve brand trust, and confidently navigate the next frontier of digital innovation.

Have questions or want to discuss strategies tailored to your product or organization? Schedule a call with our team; we're happy to collaborate on solutions that scale.
